TensorFlow

From Christoph's Personal Wiki

(→Introduction) |

(→References) |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 41: | Line 41: | ||

** Layers | ** Layers | ||

** Estimators | ** Estimators | ||

| + | |||

| + | ; Loss functions | ||

| + | * Differentiatable functions that measure differences/error between true and predicted values | ||

| + | * Common types: | ||

| + | ** [[:wikipedia:Mean squared error|Mean squared error]] (MSE) | ||

| + | ** [[:wikipedia:Cross_entropy#Cross-entropy_error_function_and_logistic_regression|Log loss]] | ||

| + | ** [[:wikipedia:Cosine similarity|Cosine distance]] | ||

| + | ** [[:wikipedia:Cross entropy|Cross entropy]] | ||

| + | |||

| + | ; Optimizers | ||

| + | * Optimizers are algorithms that minimize the loss (or error) of a model | ||

| + | * Local minimum vs. global minimum | ||

| + | * Built-in optimizers inherit from the Optimizer class | ||

| + | * Common types: | ||

| + | ** Gradient descent | ||

| + | ** Adam | ||

| + | ** RMSProp | ||

| + | ** Adagrad | ||

| + | ** Momentum | ||

| + | ** Adadelta | ||

| + | |||

| + | ; Layers | ||

| + | * What are they? | ||

| + | ** Composed of tensors and operations forming the model | ||

| + | ** Generally connected in series | ||

| + | ** Pre-made functions for creating layers in a model | ||

| + | * Common types: | ||

| + | ** Input | ||

| + | ** Convolutional (1d, 2d, 3d) | ||

| + | ** Pooling | ||

| + | ** Dropout | ||

| + | ** Dense | ||

| + | |||

| + | ; Estimators | ||

| + | * Training | ||

| + | * Evaluation | ||

| + | * Prediction | ||

| + | * Build Graph | ||

| + | |||

| + | ==Examples== | ||

| + | |||

| + | * Basic #1 | ||

| + | <pre> | ||

| + | import tensorflow as tf | ||

| + | |||

| + | m = tf.constant(3.0, name='m') | ||

| + | b = tf.constant(1.5, name='b') | ||

| + | x = tf.placeholder(dtype='float32', name='x') | ||

| + | y = m*x + b | ||

| + | |||

| + | sess = tf.Session() | ||

| + | |||

| + | y.eval({x: 2}, session=sess) # => 7.5 | ||

| + | </pre> | ||

| + | |||

| + | * Basic #2 | ||

| + | <pre> | ||

| + | import tensorflow as tf | ||

| + | |||

| + | M = tf.constant([[1,2], [3,4]], dtype='float32') | ||

| + | v = tf.constant([5,6], dtype='float32') | ||

| + | sess = tf.Session() | ||

| + | |||

| + | sess.run(M + v) | ||

| + | # array([[ 6., 8.], | ||

| + | # [ 8., 10.]], dtype=float32) | ||

| + | |||

| + | sess.run(M * v) | ||

| + | # array([[ 5., 12.], | ||

| + | # [15., 24.]], dtype=float32) | ||

| + | |||

| + | sess.run(tf.matmul(M, tf.reshape(v, [2, 1]))) | ||

| + | # array([[17.], | ||

| + | # [39.]], dtype=float32) | ||

| + | </pre> | ||

| + | |||

| + | ==Machine learning== | ||

| + | |||

| + | ; Machine learning lifecycle | ||

| + | # Define objective | ||

| + | # Collect data | ||

| + | # Data cleaning | ||

| + | # Exploratory Data Analysis (EDA) | ||

| + | # Data processing | ||

| + | # Train/evaluate models | ||

| + | # Deploy | ||

| + | # Monitor results | ||

| + | |||

| + | ; Machine learning lifecycle example | ||

| + | |||

| + | * 1. Define objective | ||

| + | : Infer how IQ, years experince, and age affects income using linear model | ||

| + | |||

| + | * 2. Collect data | ||

| + | <pre> | ||

| + | import tensorflow as tf | ||

| + | import numpy as np | ||

| + | |||

| + | import pandas as pd | ||

| + | from pandas import DataFrame as DF | ||

| + | |||

| + | # Create dataset | ||

| + | np.random.seed(555) | ||

| + | X1 = np.random.normal(100, 15, 200).astype(int) # IQ | ||

| + | X2 = np.random.normal(10, 4.5, 200) # years experience | ||

| + | X3 = np.random.normal(32, 4, 200).astype(int) # age | ||

| + | dob = np.datetime64('2017-10-31') - 365*X3 | ||

| + | b = 5 | ||

| + | er = np.random.normal(0, 1.5, 200) # noise/error | ||

| + | |||

| + | Y = np.array([0.3*x1 + 1.5*x2 + 0.83*x3 + b + e for x1,x2,x3,e in zip(X1,X2,X3,er)]) | ||

| + | </pre> | ||

| + | |||

| + | * 3. Data Cleaning | ||

| + | <pre> | ||

| + | cols = ['iq', 'years_experience', 'dob'] | ||

| + | df = DF(list(zip(X1,X2,dob)), columns=cols) | ||

| + | df['income'] = Y | ||

| + | df.info() | ||

| + | # <class 'pandas.core.frame.DataFrame'> | ||

| + | # RangeIndex: 200 entries, 0 to 199 | ||

| + | # Data columns (total 4 columns): | ||

| + | # iq 200 non-null int64 | ||

| + | # years_experience 200 non-null float64 | ||

| + | # dob 200 non-null datetime64[ns] | ||

| + | # income 200 non-null float64 | ||

| + | # dtypes: datetime64[ns](1), float64(2), int64(1) | ||

| + | # memory usage: 6.3 KB | ||

| + | |||

| + | df.describe() | ||

| + | # Remove any negative values for years of experience | ||

| + | df = df[df.years_experience >= 0] | ||

| + | df.describe() # no more negative values | ||

| + | </pre> | ||

| + | |||

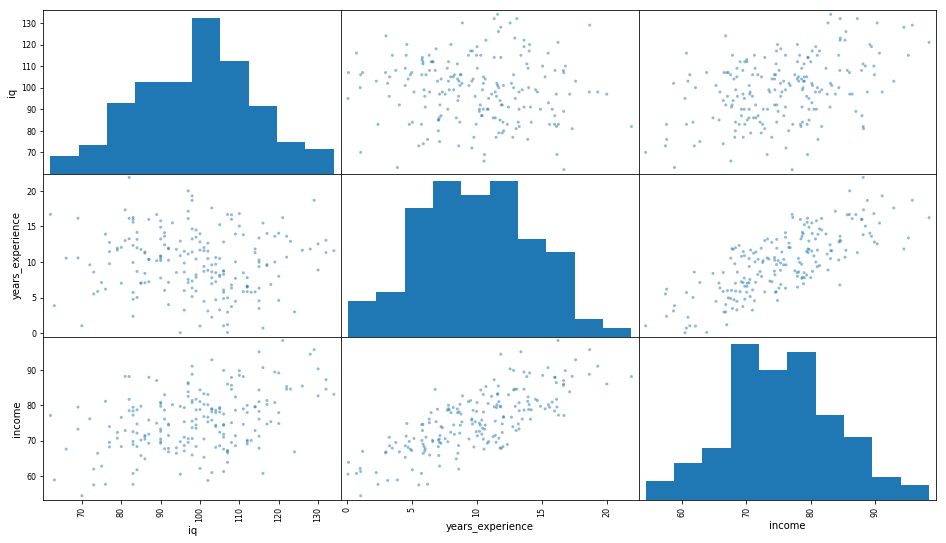

| + | * 4. EDA | ||

| + | <pre> | ||

| + | df.describe(include=['datetime64']) | ||

| + | |||

| + | import matplotlib.pyplot as plt | ||

| + | %matplotlib inline | ||

| + | |||

| + | pd.plotting.scatter_matrix(df, figsize=(16,9)); | ||

| + | </pre> | ||

| + | [[File:Tensorflow iq scatter matrix.png]] | ||

==References== | ==References== | ||

Latest revision as of 01:34, 30 April 2018

TensorFlow is an open-source software library for dataflow programming across a range of tasks. It is a symbolic math library, and is also used for machine learning applications such as neural networks.[1]

Introduction

- Tensors
  - N-dimensional arrays
  - Measured by "rank"
  - All elements are same datatype

# Rank 0:
[1]
# Rank 1:
[1][2][3]
# Rank 2:
[1][2][3]
[4][5][6]
# Rank 3 (3D):
[1][2][3]
[4][5][6]
[7][8][9]
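As a rough illustration of rank, the sketch below counts the nesting depth of plain Python lists. `rank` is a hypothetical helper written for these notes, not a TensorFlow function, and it assumes a non-empty, rectangular nested list.

```python
def rank(tensor):
    """Return the number of dimensions of a rectangular nested list.

    A bare number counts as rank 0. Assumes every level is non-empty.
    """
    depth = 0
    while isinstance(tensor, list):
        depth += 1
        tensor = tensor[0]  # descend into the first element
    return depth


scalar = 1
vector = [1, 2, 3]
matrix = [[1, 2, 3], [4, 5, 6]]
cube = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]

ranks = [rank(scalar), rank(vector), rank(matrix), rank(cube)]
print(ranks)  # [0, 1, 2, 3]
```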

- Tensor operations
  - Addition and subtraction
  - Multiplication and division
  - Matrix multiplication
  - Dot product
  - Transpose

[1  2  3  4 ]T   [1  5  9 ]
|5  6  7  8 |  = |2  6  10|
[9  10 11 12]    |3  7  11|
                 [4  8  12]
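The operations listed above can be sketched in plain Python on lists of lists. This is only an illustration of the arithmetic, not how TensorFlow implements it; `transpose`, `dot`, and `matmul` are hypothetical helper names.

```python
def transpose(matrix):
    """Swap rows and columns of a rectangular list of lists."""
    return [list(column) for column in zip(*matrix)]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def matmul(a, b):
    """Matrix product: entry (i, j) is the dot product of row i of a and column j of b."""
    b_columns = transpose(b)
    return [[dot(row, column) for column in b_columns] for row in a]


A = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12]]

A_T = transpose(A)
print(A_T)  # [[1, 5, 9], [2, 6, 10], [3, 7, 11], [4, 8, 12]]
print(dot([1, 2, 3], [4, 5, 6]))  # 32
print(matmul([[1, 2], [3, 4]], [[5], [6]]))  # [[17], [39]]
```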

- TensorFlow building blocks
  - Lower level
    - Tensors
    - Operations
    - Graphs and sessions
  - Higher level
    - Loss functions
    - Optimizers
    - Layers
    - Estimators

- Loss functions
  - Differentiable functions that measure the difference (error) between true and predicted values
  - Common types:
    - Mean squared error (MSE)
    - Log loss
    - Cosine distance
    - Cross entropy
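Two widely used loss functions, mean squared error and log loss (binary cross entropy), can be sketched in plain Python as follows. The function names and the `eps` clipping value are choices made for this illustration, not TensorFlow's API.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between true and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def log_loss(y_true, y_prob, eps=1e-12):
    """Binary cross entropy.

    y_true holds 0/1 labels, y_prob the predicted probability of class 1.
    Probabilities are clipped to [eps, 1 - eps] to avoid log(0).
    """
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


mse = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.5, 2.0])
ll = log_loss([1, 0], [0.9, 0.1])
print(mse)  # (0 + 0.25 + 1) / 3, about 0.4167
print(ll)   # -log(0.9), about 0.1054
```

Both functions return 0 for perfect predictions and grow as predictions move away from the true values.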

- Optimizers
  - Optimizers are algorithms that minimize the loss (or error) of a model
  - Local minimum vs. global minimum
  - Built-in optimizers inherit from the Optimizer class
  - Common types:
    - Gradient descent
    - Adam
    - RMSProp
    - Adagrad
    - Momentum
    - Adadelta
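A minimal sketch of plain gradient descent, the simplest optimizer in the list above, on a one-parameter loss. The loss `(w - 3)^2`, the learning rate, and the step count are assumptions picked for the example; this loss is convex, so its only local minimum is also the global one.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    w = start
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


def loss(w):
    """Toy loss with its minimum at w = 3."""
    return (w - 3.0) ** 2


def loss_grad(w):
    """Derivative of the toy loss with respect to w."""
    return 2.0 * (w - 3.0)


w_min = gradient_descent(loss_grad, start=0.0)
print(w_min)        # very close to 3.0
print(loss(w_min))  # very close to 0.0
```

On a non-convex loss the same loop can settle in a local minimum, depending on the starting point and learning rate.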

- Layers
  - What are they?
    - Composed of tensors and operations forming the model
    - Generally connected in series
    - Pre-made functions for creating layers in a model
  - Common types:
    - Input
    - Convolutional (1d, 2d, 3d)
    - Pooling
    - Dropout
    - Dense
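To make "connected in series" concrete, here is a rough plain-Python sketch of the forward pass through two dense (fully connected) layers. The `dense` and `relu` helpers, the weights, and the biases are all made up for illustration; TensorFlow's own layer functions do considerably more.

```python
def relu(x):
    """Rectified linear activation: negative values become 0."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation=relu):
    """Forward pass of a dense layer.

    weights[j] holds the weights of output unit j, one per input value.
    """
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(activation(weighted_sum))
    return outputs


# Two layers connected in series: the output of the first feeds the second.
hidden = dense([1.0, 2.0], weights=[[0.5, -1.0], [1.0, 1.0]], biases=[0.0, 0.5])
output = dense(hidden, weights=[[2.0, 1.0]], biases=[-1.0], activation=lambda x: x)

print(hidden)  # [0.0, 3.5]
print(output)  # [2.5]
```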

- Estimators
  - Training
  - Evaluation
  - Prediction
  - Build Graph

Examples

- Basic #1

import tensorflow as tf

m = tf.constant(3.0, name='m')

b = tf.constant(1.5, name='b')

x = tf.placeholder(dtype='float32', name='x')

y = m*x + b

sess = tf.Session()

y.eval({x: 2}, session=sess) # => 7.5
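Basic #1 follows a define-then-run pattern: the expression for y is built first and is only evaluated once a value for x is fed in. The sketch below imitates that pattern in plain Python with no TensorFlow involved; `linear` and `run` are hypothetical helpers, not part of any library.

```python
def linear(m, b, input_name):
    """Build a deferred computation y = m*x + b; nothing is computed yet."""
    def node(feed):
        return m * feed[input_name] + b
    return node


def run(node, feed):
    """Evaluate a deferred computation with concrete input values."""
    return node(feed)


y = linear(3.0, 1.5, "x")   # define the computation
result = run(y, {"x": 2})   # feed a value and evaluate it
print(result)  # 7.5
```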

- Basic #2

import tensorflow as tf

M = tf.constant([[1,2], [3,4]], dtype='float32')
v = tf.constant([5,6], dtype='float32')
sess = tf.Session()

sess.run(M + v)
# array([[ 6.,  8.],
#        [ 8., 10.]], dtype=float32)

sess.run(M * v)
# array([[ 5., 12.],
#        [15., 24.]], dtype=float32)

sess.run(tf.matmul(M, tf.reshape(v, [2, 1])))
# array([[17.],
#        [39.]], dtype=float32)
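In Basic #2, `M + v` and `M * v` work element-wise, with the vector `v` applied to every row of `M` (broadcasting), while `tf.matmul` performs a true matrix product. A plain-Python sketch of the same arithmetic, written out with explicit loops for illustration:

```python
M = [[1.0, 2.0], [3.0, 4.0]]
v = [5.0, 6.0]

# Broadcasting: v is paired element-wise with each row of M.
added = [[m + x for m, x in zip(row, v)] for row in M]
multiplied = [[m * x for m, x in zip(row, v)] for row in M]

# Matrix product with v treated as a 2x1 column: one dot product per row of M.
mat_vec = [[sum(m * x for m, x in zip(row, v))] for row in M]

print(added)       # [[6.0, 8.0], [8.0, 10.0]]
print(multiplied)  # [[5.0, 12.0], [15.0, 24.0]]
print(mat_vec)     # [[17.0], [39.0]]
```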

Machine learning

- Machine learning lifecycle
  1. Define objective
  2. Collect data
  3. Data cleaning
  4. Exploratory Data Analysis (EDA)
  5. Data processing
  6. Train/evaluate models
  7. Deploy
  8. Monitor results

- Machine learning lifecycle example

- 1. Define objective

  - Infer how IQ, years of experience, and age affect income using a linear model

- 2. Collect data

import tensorflow as tf

import numpy as np

import pandas as pd

from pandas import DataFrame as DF

# Create dataset

np.random.seed(555)

X1 = np.random.normal(100, 15, 200).astype(int) # IQ

X2 = np.random.normal(10, 4.5, 200) # years experience

X3 = np.random.normal(32, 4, 200).astype(int) # age

dob = np.datetime64('2017-10-31') - 365*X3

b = 5

er = np.random.normal(0, 1.5, 200) # noise/error

Y = np.array([0.3*x1 + 1.5*x2 + 0.83*x3 + b + e for x1,x2,x3,e in zip(X1,X2,X3,er)])

- 3. Data Cleaning

cols = ['iq', 'years_experience', 'dob']
df = DF(list(zip(X1,X2,dob)), columns=cols)
df['income'] = Y
df.info()
# <class 'pandas.core.frame.DataFrame'>
# RangeIndex: 200 entries, 0 to 199
# Data columns (total 4 columns):
# iq                  200 non-null int64
# years_experience    200 non-null float64
# dob                 200 non-null datetime64[ns]
# income              200 non-null float64
# dtypes: datetime64[ns](1), float64(2), int64(1)
# memory usage: 6.3 KB

df.describe()
# Remove any negative values for years of experience
df = df[df.years_experience >= 0]
df.describe() # no more negative values
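Negative values appear in the first place because years of experience are drawn from a normal distribution with mean 10 and standard deviation 4.5, which puts a small amount of probability below zero. A quick estimate using only the standard library (the count actually produced with seed 555 may differ from this expectation):

```python
from statistics import NormalDist

# Same parameters as the X2 draw: mean 10, standard deviation 4.5.
years_experience = NormalDist(mu=10, sigma=4.5)

# Probability that a single draw falls below zero.
p_negative = years_experience.cdf(0)

# Expected number of negative rows among the 200 samples.
expected_negative_rows = 200 * p_negative

print(round(p_negative, 4))              # roughly 0.013
print(round(expected_negative_rows, 1))  # roughly 2.6
```

So on average only a handful of the 200 rows are dropped by the filter.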

- 4. EDA

df.describe(include=['datetime64'])

import matplotlib.pyplot as plt
%matplotlib inline

pd.plotting.scatter_matrix(df, figsize=(16,9));

[Figure: scatter matrix of the dataset (File: Tensorflow iq scatter matrix.png)]

References

- [1] "TensorFlow: Open source machine learning". "It is machine learning software being used for various kinds of perceptual and language understanding tasks." Jeffrey Dean, minute 0:47 / 2:17 of the YouTube clip.